Machine learning (ML) has become a transformative force in the world of artificial intelligence (AI), powering a wide range of technologies from recommendation systems and self-driving cars to healthcare diagnostics and natural language processing. As machine learning continues to evolve, it’s crucial to understand the various types of machine learning approaches that make it so powerful.

These approaches can be categorized into several distinct paradigms, including supervised machine learning, unsupervised machine learning, semi-supervised learning, reinforcement learning, and others like transfer learning, federated learning, and deep reinforcement learning. Each of these paradigms offers unique strengths and is suited to specific problem domains.

In this article, we explore these machine learning techniques in detail, highlighting their key characteristics and looking at emerging trends such as few-shot learning, active learning, and meta-learning.

1. Supervised Machine Learning

Supervised machine learning is perhaps the most commonly used form of machine learning. In supervised learning, the model is trained on labeled data, which means that each input in the training dataset has a corresponding output or label. The objective is for the model to learn the mapping between inputs and outputs so that it can accurately predict labels for unseen data.

The core of supervised learning involves two main tasks: classification and regression. In classification, the model learns to categorize inputs into predefined classes, while in regression, it learns to predict continuous values. For instance, in a classification problem, the task could be to determine whether an email is spam or not, while in a regression problem, the task might be to predict the price of a house based on its features (size, location, etc.).

Popular algorithms in supervised learning include decision trees, support vector machines (SVMs), k-nearest neighbors (KNN), and neural networks.
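To make the idea of learning from labeled examples concrete, here is a minimal sketch of a k-nearest neighbors classifier for the spam example. The two features per email and the tiny dataset are hypothetical, chosen only for illustration; a real project would more likely use a library implementation.

```python
from collections import Counter
import math

def knn_predict(train_points, train_labels, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest training points."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical labeled data: (number of links, number of "urgent" words) per email
train_points = [(0, 0), (1, 0), (0, 1), (5, 4), (6, 5), (5, 6)]
train_labels = ["ham", "ham", "ham", "spam", "spam", "spam"]

print(knn_predict(train_points, train_labels, (1, 1)))  # near the "ham" examples
print(knn_predict(train_points, train_labels, (5, 5)))  # near the "spam" examples
```

The same function becomes a regressor if the majority vote is replaced by an average of the neighbors' numeric targets.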

Use Cases

- Speech recognition

- Email spam filtering

- Credit scoring

2. Unsupervised Machine Learning

In contrast to supervised learning, unsupervised machine learning involves training models on data that is not labeled. The goal of unsupervised learning is to discover patterns, relationships, or structures in the data. Without predefined labels, the model must learn to identify inherent structures or clusters within the data on its own.

The primary tasks in unsupervised learning are clustering and dimensionality reduction. In clustering, the model groups data into clusters based on similarity, such as segmenting customers into different groups based on purchasing behavior. In dimensionality reduction, the goal is to reduce the number of features in the dataset while preserving important information, often using techniques like Principal Component Analysis (PCA) or t-SNE.
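As an illustration of clustering, the following is a minimal sketch of k-means (Lloyd's algorithm) on a toy customer dataset. The data, the feature meanings, and the choice of starting centroids are assumptions made for the example; note that no labels are used anywhere.

```python
import math

def kmeans(points, initial_centroids, iterations=10):
    """Alternate between assigning points to centroids and recomputing the centroids."""
    centroids = list(initial_centroids)
    assignments = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid
        assignments = [
            min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members
        for c in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, assignments

# Hypothetical customers: (monthly purchases, average basket size)
customers = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
centroids, assignments = kmeans(customers, [customers[0], customers[3]])
print(assignments)
print(centroids)
```

In practice the starting centroids are usually chosen at random or with a scheme such as k-means++, and the result can depend on that choice.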

Use Cases

- Customer segmentation

- Anomaly detection

- Feature extraction

3. Semi-supervised Learning

Semi-supervised learning combines aspects of both supervised and unsupervised learning. In this approach, the model is trained on a small amount of labeled data alongside a much larger amount of unlabeled data. Semi-supervised learning is particularly useful when labeling data is expensive or time-consuming, but a large amount of unlabeled data is readily available.

By leveraging both labeled and unlabeled data, semi-supervised learning can outperform purely supervised learning when labeled data is scarce. It is common in fields such as computer vision and natural language processing, where unlabeled data is plentiful but labeled datasets are limited.
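One common way to combine the two kinds of data is self-training (pseudo-labeling), sketched below under simplifying assumptions: one-dimensional features, a nearest-centroid classifier, and a hypothetical `margin` parameter that decides when a prediction is confident enough to be treated as a label. Other semi-supervised methods work quite differently.

```python
def class_centroids(xs, ys):
    """Mean feature value of each class (1-D features for simplicity)."""
    sums, counts = {}, {}
    for x, y in zip(xs, ys):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def self_train(labeled_x, labeled_y, unlabeled_x, margin=1.0, rounds=5):
    """Repeatedly pseudo-label confident unlabeled points, then refit the centroids."""
    xs, ys = list(labeled_x), list(labeled_y)
    pool = list(unlabeled_x)
    for _ in range(rounds):
        centroids = class_centroids(xs, ys)
        remaining = []
        for x in pool:
            ranked = sorted(centroids, key=lambda label: abs(x - centroids[label]))
            best, runner_up = ranked[0], ranked[1]
            # Confidence: how much closer the best centroid is than the runner-up
            confidence = abs(x - centroids[runner_up]) - abs(x - centroids[best])
            if confidence >= margin:
                xs.append(x)
                ys.append(best)
            else:
                remaining.append(x)
        if len(remaining) == len(pool):  # nothing new was labeled; stop early
            break
        pool = remaining
    return class_centroids(xs, ys)

# Two labeled points and seven unlabeled ones (hypothetical data)
model = self_train([1.0, 9.0], ["a", "b"], [0.5, 1.5, 2.0, 8.0, 8.5, 9.5, 5.2])
print(model)
```

The ambiguous point at 5.2 never clears the margin, so it is left unlabeled rather than risk reinforcing a wrong guess.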

Use Cases

- Image classification

- Speech recognition

- Text categorization

4. Reinforcement Learning

Reinforcement learning (RL) is a type of machine learning where an agent learns to make decisions by interacting with an environment. The agent takes actions and receives feedback in the form of rewards or penalties, which it uses to improve its future behavior. Over time, the goal is to maximize cumulative reward by learning an optimal policy.

RL is particularly powerful in tasks that involve sequential decision-making, such as robotics, game playing, and autonomous driving. The best-known example is DeepMind’s AlphaGo, which combined RL with supervised learning from human games and tree search to defeat human champions in the game of Go.

Key Components of RL:

- Agent: The learner or decision maker.

- Environment: The external system that the agent interacts with.

- Action: The choices made by the agent.

- Reward: The feedback the agent receives based on its actions.

- Policy: The strategy the agent uses to decide its next move.
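The components above can be seen together in a minimal sketch of tabular Q-learning. The five-state corridor environment, the hyperparameters, and all names here are assumptions made for illustration: the agent starts at state 0 and is rewarded only when it reaches state 4.

```python
import random

N_STATES = 5       # states 0..4; state 4 is the goal
ACTIONS = (0, 1)   # 0 = move left, 1 = move right

def step(state, action):
    """Environment: apply an action and return (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Policy: epsilon-greedy over current Q-values, ties broken at random
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Move Q(state, action) toward reward + discounted best next value
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q_table = train()
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)  # greedy action for each non-goal state
```

After training, the greedy policy should choose "right" in every non-goal state, since that is the shortest path to the reward.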

Use Cases

- Game AI (e.g., AlphaGo)

- Robotics

- Autonomous vehicles

5. Transfer Learning

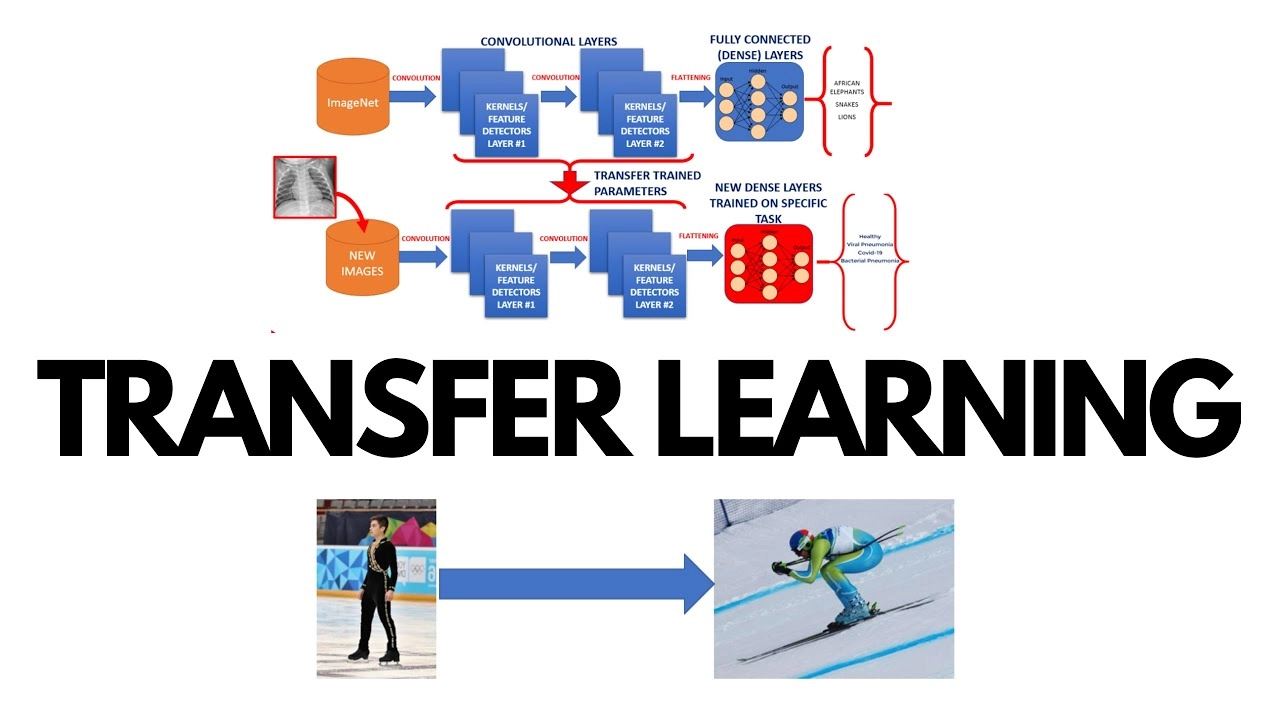

Transfer learning involves transferring knowledge gained from one task to improve learning in a different but related task. This technique is particularly useful when data is scarce for the target task but abundant for a similar task. Transfer learning is often used in deep learning, where a model trained on a large dataset for one task (e.g., image classification) can be adapted to perform a new task with less data (e.g., medical image analysis).

In transfer learning, the lower layers of a neural network (which typically capture low-level features like edges and textures) can be reused across different tasks, while only the higher layers (which capture task-specific features) need to be retrained.
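The split between reused and retrained layers can be sketched as follows. This is a toy illustration, not a real pretrained network: `pretrained_features` is a hypothetical fixed function standing in for the frozen lower layers, and only a small logistic-regression head is trained on the new task.

```python
import math

def pretrained_features(x):
    """Hypothetical stand-in for frozen lower layers: a fixed, non-trainable feature map."""
    return (x[0] + x[1], x[0] - x[1])

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(inputs, labels, lr=0.1, epochs=200):
    """Train only the task-specific head with full-batch gradient descent."""
    feats = [pretrained_features(x) for x in inputs]  # computed once, never updated
    w, b = [0.0, 0.0], 0.0
    n = len(feats)
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for f, y in zip(feats, labels):
            err = sigmoid(w[0] * f[0] + w[1] * f[1] + b) - y  # log-loss gradient w.r.t. the logit
            grad_w[0] += err * f[0] / n
            grad_w[1] += err * f[1] / n
            grad_b += err / n
        w = [w[0] - lr * grad_w[0], w[1] - lr * grad_w[1]]
        b -= lr * grad_b
    return w, b

def predict(x, w, b):
    f = pretrained_features(x)
    return 1 if sigmoid(w[0] * f[0] + w[1] * f[1] + b) >= 0.5 else 0

# Small target-task dataset (hypothetical): label is 1 when the first value is larger
inputs = [(2, 1), (3, 0), (1, 0), (1, 2), (0, 3), (0, 1)]
labels = [1, 1, 1, 0, 0, 0]
w, b = train_head(inputs, labels)
print(predict((4, 1), w, b), predict((1, 4), w, b))
```

Because the feature map already exposes a useful signal, six labeled examples are enough for the head; that is the practical appeal of reusing lower layers.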

Use Cases

- Image recognition

- Natural language processing (e.g., BERT, GPT)

- Medical diagnostics

6. Federated Learning

Federated learning is a decentralized machine learning approach that enables multiple devices to collaboratively train a model without sharing raw data. Instead of sending data to a central server, each device trains the model locally and only shares the model updates. This is particularly important in privacy-sensitive applications, where data cannot be shared due to privacy or regulatory concerns.

Federated learning has gained significant attention in applications like mobile phones, where devices can train models on user data locally, enabling personalized experiences without compromising privacy.
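The local-training-plus-shared-updates loop can be sketched with federated averaging on a one-parameter model. The three client datasets, the learning rate, and the model `y = w * x` are assumptions chosen to keep the example small; real systems add secure aggregation, client sampling, and much larger models.

```python
def local_update(w, data, lr=0.01, steps=5):
    """Client side: a few gradient steps on local data for the model y = w * x."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_average(client_datasets, rounds=50):
    """Server side: broadcast w, collect locally trained weights, average them."""
    w = 0.0
    total = sum(len(d) for d in client_datasets)
    for _ in range(rounds):
        local_weights = [local_update(w, d) for d in client_datasets]
        # Weight each client's result by how much data it holds
        w = sum(lw * len(d) for lw, d in zip(local_weights, client_datasets)) / total
    return w

# Hypothetical private datasets; each stays on its own device
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(1.0, 3.0), (4.0, 12.0), (2.0, 6.0)],
]
global_w = federated_average(clients)
print(round(global_w, 3))
```

Only the scalar weight crosses the network in each round; the `(x, y)` pairs never leave `local_update`.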

Use Cases

- Mobile phone applications (e.g., keyboard predictions)

- Healthcare applications

- Smart home devices

7. Deep Reinforcement Learning

Deep reinforcement learning (DRL) is an advanced form of reinforcement learning that leverages deep neural networks to handle high-dimensional state and action spaces. In DRL, the agent uses deep learning techniques to approximate value functions or policies, enabling it to tackle complex problems that tabular RL methods cannot handle efficiently.

Deep reinforcement learning has been a breakthrough in many real-world applications, particularly in domains requiring high levels of autonomy, such as robotics and gaming.
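To show what approximating a value function with a network means, here is a deliberately tiny sketch: a one-hidden-layer network that outputs one Q-value per action, updated with a semi-gradient Q-learning step. The class name, sizes, and hyperparameters are hypothetical, and the sketch omits the replay buffers and target networks that practical DRL systems typically rely on.

```python
import math
import random

class TinyQNetwork:
    """One-hidden-layer network mapping a state vector to one Q-value per action."""

    def __init__(self, n_inputs, n_hidden, n_actions, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_actions)]
        self.b2 = [0.0] * n_actions

    def forward(self, state):
        hidden = [math.tanh(sum(w * s for w, s in zip(row, state)) + b)
                  for row, b in zip(self.w1, self.b1)]
        q_values = [sum(w * h for w, h in zip(row, hidden)) + b
                    for row, b in zip(self.w2, self.b2)]
        return hidden, q_values

    def td_update(self, state, action, reward, next_state, done, gamma=0.9, lr=0.05):
        """One semi-gradient Q-learning step on a single transition; returns the TD error."""
        hidden, q_values = self.forward(state)
        _, next_q = self.forward(next_state)
        target = reward + (0.0 if done else gamma * max(next_q))  # treated as a constant
        td_error = target - q_values[action]
        # Backpropagate through the chosen action's output only
        for j, h in enumerate(hidden):
            grad_hidden = self.w2[action][j] * (1.0 - h * h)  # uses the pre-update weight
            self.w2[action][j] += lr * td_error * h
            for i, s in enumerate(state):
                self.w1[j][i] += lr * td_error * grad_hidden * s
            self.b1[j] += lr * td_error * grad_hidden
        self.b2[action] += lr * td_error
        return td_error

net = TinyQNetwork(n_inputs=2, n_hidden=4, n_actions=2)
transition = ([1.0, 0.0], 1, 1.0, [0.0, 1.0], True)  # state, action, reward, next_state, done
first_error = abs(net.td_update(*transition))
for _ in range(200):
    last_error = abs(net.td_update(*transition))
print(first_error, last_error)
```

Replaying one transition is only a sanity check that the update shrinks the TD error; a real agent would train on many transitions gathered while acting in the environment.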

Use Cases

- Autonomous vehicles

- Game playing (e.g., AlphaZero)

- Robotics

8. Few-shot Learning

Few-shot learning is a subfield of machine learning focused on training models that can learn new tasks with only a few examples. In traditional machine learning, a large dataset is usually required to train an effective model. However, few-shot learning aims to enable models to generalize from only a few training instances, making it ideal for scenarios where data is scarce.

Few-shot learning often utilizes techniques like meta-learning (learning to learn) and transfer learning to achieve this feat.
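One simple way to classify from a handful of examples is to compare a query with per-class prototypes, in the spirit of prototypical networks. The sketch below assumes the inputs are already embedded as small vectors; in practice that embedding would come from a model trained with meta-learning or transfer learning, and the class names and numbers here are hypothetical.

```python
import math

def build_prototypes(support_set):
    """Average the few available examples of each class into one prototype vector."""
    return {
        label: tuple(sum(dim) / len(examples) for dim in zip(*examples))
        for label, examples in support_set.items()
    }

def classify(query, prototypes):
    """Assign the query to the class whose prototype is nearest."""
    return min(prototypes, key=lambda label: math.dist(query, prototypes[label]))

# 3-way, 2-shot support set: two embedded examples per class (hypothetical values)
support_set = {
    "cat": [(1.0, 1.0), (1.2, 0.8)],
    "dog": [(4.0, 4.0), (3.8, 4.2)],
    "bird": [(1.0, 4.0), (0.8, 4.2)],
}
prototypes = build_prototypes(support_set)
print(classify((1.1, 3.9), prototypes))
print(classify((3.9, 3.9), prototypes))
```

Adding a new class requires only a few embedded examples and one extra prototype, with no retraining of the classifier itself.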

Use Cases

- Personalized recommendations

- Medical image classification

- Speech recognition

9. Active Learning

Active learning is a type of machine learning where the model can actively query the user (or an oracle) for labels on specific instances. This is typically used when labeling is expensive, and the goal is to minimize the number of labeled instances required to achieve high performance. By selecting the most informative instances for labeling, active learning allows models to learn efficiently with fewer labeled examples.
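A common selection strategy is uncertainty sampling: ask for the label of the instance the current model is least sure about. The sketch below assumes one-dimensional data, a simple threshold classifier, and a hypothetical `oracle` function playing the role of the human annotator.

```python
def fit_boundary(labeled):
    """Threshold classifier: midpoint between the largest negative and smallest positive."""
    largest_neg = max(x for x, y in labeled if y == 0)
    smallest_pos = min(x for x, y in labeled if y == 1)
    return (largest_neg + smallest_pos) / 2

def active_learn(pool, oracle, labeled, budget):
    """Spend a limited labeling budget on the points nearest the decision boundary."""
    pool = list(pool)
    labeled = list(labeled)
    for _ in range(budget):
        if not pool:
            break
        boundary = fit_boundary(labeled)
        # Uncertainty sampling: the point nearest the boundary is the least certain
        query = min(pool, key=lambda x: abs(x - boundary))
        pool.remove(query)
        labeled.append((query, oracle(query)))
    return fit_boundary(labeled), labeled

def oracle(x):
    """Hypothetical annotator: the true (hidden) threshold is 6.3."""
    return 1 if x >= 6.3 else 0

seed_labels = [(0, 0), (10, 1)]
boundary, labeled = active_learn(range(1, 10), oracle, seed_labels, budget=3)
print(boundary, len(labeled))
```

With three queries the estimate moves from 5.0 to 6.5, close to the hidden threshold, while six of the nine pool points never need a label.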

Use Cases

- Text classification

- Image annotation

- Speech recognition

10. Meta-learning

Meta-learning, or “learning to learn,” is an approach where models are trained to improve their own learning process. The goal is to create models that can adapt quickly to new tasks with minimal data. Meta-learning algorithms are designed to identify patterns in learning processes across different tasks and apply this knowledge to new situations.

Meta-learning is often used in few-shot learning and is a critical area of research for developing highly adaptable machine-learning models.
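As one concrete instance, the sketch below follows the Reptile algorithm on a family of toy regression tasks of the form `y = slope * x`. The tasks, step sizes, and helper names are assumptions for illustration; the point is that the meta-learned starting weight lets a new task be fitted well in only a few gradient steps.

```python
def adapt(w, task_data, lr=0.05, steps=3):
    """Inner loop: a few gradient steps on one task for the model y = w * x."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in task_data) / len(task_data)
        w -= lr * grad
    return w

def reptile(tasks, meta_lr=0.1, meta_iterations=200):
    """Outer loop: nudge the shared starting weight toward each task's adapted weight."""
    meta_w = 0.0
    for _ in range(meta_iterations):
        for task_data in tasks:
            adapted = adapt(meta_w, task_data)
            meta_w += meta_lr * (adapted - meta_w)
    return meta_w

def make_task(slope, xs=(1.0, 2.0)):
    """Hypothetical task generator: points on the line y = slope * x."""
    return [(x, slope * x) for x in xs]

meta_w = reptile([make_task(2.0), make_task(3.0), make_task(4.0)])

# A new, unseen task: compare adapting from the meta-learned start vs. from zero
new_task = make_task(3.5)
error_from_meta = abs(adapt(meta_w, new_task) - 3.5)
error_from_scratch = abs(adapt(0.0, new_task) - 3.5)
print(round(meta_w, 2), error_from_meta < error_from_scratch)
```

Both runs get the same three adaptation steps; the only difference is the starting point, which is exactly what the outer loop learns.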

Use Cases

- Personalized AI assistants

- Autonomous robots

- Recommendation systems

Conclusion

Machine learning has evolved into a complex and dynamic field, with various approaches offering distinct advantages depending on the problem at hand. From supervised machine learning, where labeled data is used to train models, to unsupervised learning, where patterns are discovered without predefined labels, each paradigm plays a key role in pushing the boundaries of AI.

Techniques like reinforcement learning enable autonomous decision-making, while transfer learning and federated learning help in leveraging prior knowledge and maintaining privacy. Emerging trends such as deep reinforcement learning, few-shot learning, and meta-learning represent the next frontier in making machine learning models more adaptive, efficient, and capable of learning from limited data.

By understanding these approaches and their respective applications, we can better appreciate the immense potential machine learning holds for revolutionizing industries across the globe. As AI continues to advance, these methodologies will undoubtedly play an increasingly important role in shaping the future of intelligent systems.